Earlier this year, a dentist asked me to build him a website. Not a clinic website or a patient-facing UI, just something he could use to show his work to a wider audience, almost like a portfolio.

I decided to go with Vue.js. I had never used it before, but it turned out to be super easy to set up. Actually working with it was more hit and miss, but overall the experience was quite enjoyable.

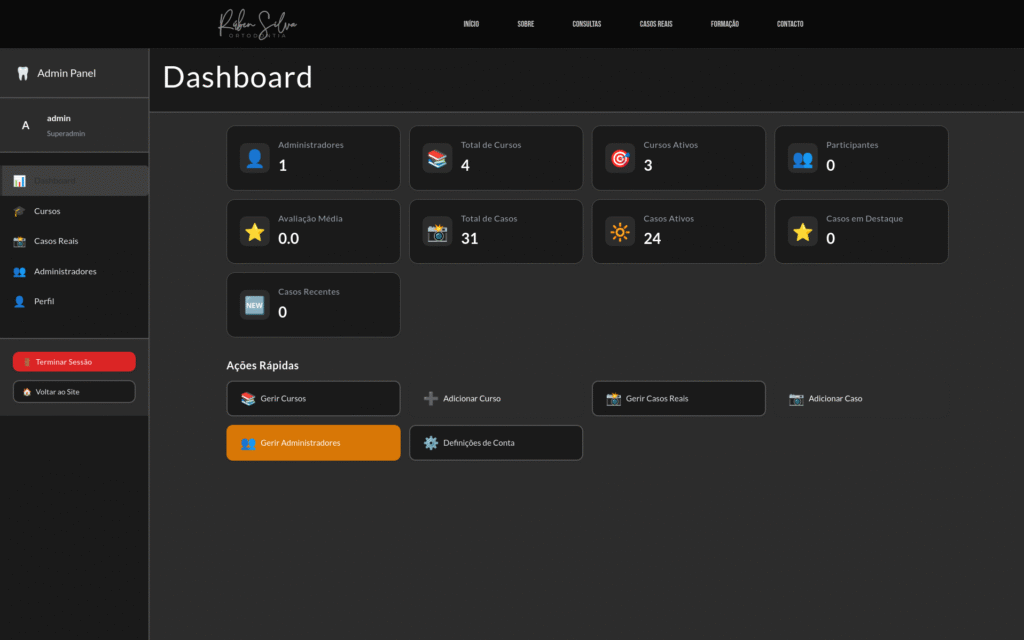

The website displays dynamic content rendered from database records and, obviously, has an admin backend where that content can be added, edited, removed, etc.

To kick off the project, I built a rough prototype filled with placeholder text and stock photos, just to give an idea of what the site could look like. This is where my own cloud server came in: I gave the dentist a folder in Nextcloud, where he kept a text document listing all the changes he wanted, and I worked through them as they were added.

I also created tasks for the project in Plane, not for everything, just for the bigger items. This was mostly to help me keep track of the work I had done, as well as the requests coming in through the shared Nextcloud document.

This was also my first time adding tracking to a website; in this case, Meta Pixel. It records visits to the site, so that later, if we run ad campaigns, we can target them at the people who have already checked the website out.

Here we can see a video of the website populated with real data.

I think the biggest lesson from building the website was seeing how much of its traffic comes from bots. Every day there are hundreds of requests across several of the site's pages, and the bulk of them come from crawlers: Google's bots for search indexing, plus Perplexity's, OpenAI's, and other crawlers collecting data for LLMs, etc. It really sucks, because you can't even map the number of page hits to real people seeing the content. Even if an AI fetches a page, nothing guarantees that the information ever reaches an end user.
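One rough way to put a number on this is to split access-log hits by user agent. The sketch below is illustrative only: it assumes an nginx or Apache server writing the default "combined" log format, and `BOT_TOKENS`, `is_bot` and `split_hits` are hypothetical names chosen for the example. User agents are self-reported and easy to spoof, so anything that doesn't match a known crawler is counted as "other" rather than "human".

```python
import re
from collections import Counter

# Illustrative, non-exhaustive list of crawler user-agent tokens (lowercased).
# Assumption: user agents are self-reported, so this is only a rough split.
BOT_TOKENS = ("googlebot", "bingbot", "gptbot", "oai-searchbot",
              "chatgpt-user", "perplexitybot", "claudebot")

# Assumed "combined" log format (nginx/Apache default):
# ip - user [time] "METHOD path PROTO" status size "referer" "user-agent"
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def is_bot(user_agent: str) -> bool:
    """True if the user agent contains one of the known crawler tokens."""
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def split_hits(lines) -> Counter:
    """Count log lines as "bot" or "other"; unparseable lines are skipped."""
    counts = Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if m is None:
            continue  # not in combined format
        counts["bot" if is_bot(m["ua"]) else "other"] += 1
    return counts
```

Substring matching keeps it simple; a stricter check would also verify the crawler's IP, since Google, for example, documents reverse-DNS verification for Googlebot.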

Of course, I also saw the actors, bad and good, that spam newly created websites with the most basic attack attempts, like trying to read environment and config files (.env, .conf, etc.).
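Since a portfolio site like this never serves `.env` or config files, any request for one is a probe. A minimal sketch for flagging them, assuming requests have already been parsed into `(ip, path)` pairs; the pattern list and the `is_probe`/`probes_by_ip` helpers are hypothetical and far from exhaustive:

```python
import re
from collections import Counter

# Illustrative patterns for common low-effort probes.
# Assumption: the site never legitimately serves any of these paths.
PROBE_PATTERNS = [
    re.compile(r"(^|/)\.env(\.[\w-]+)?$"),  # /.env, /.env.local, /api/.env
    re.compile(r"(^|/)\.git(/|$)"),         # /.git/config, /.git/HEAD
    re.compile(r"\.(conf|ini|bak|sql)$"),   # config files and dumps
    re.compile(r"(^|/)wp-(login|admin)"),   # WordPress admin on a non-WP site
]


def is_probe(path: str) -> bool:
    """True if the request path (query string ignored) matches a probe pattern."""
    path = path.split("?", 1)[0].lower()
    return any(p.search(path) for p in PROBE_PATTERNS)


def probes_by_ip(requests) -> Counter:
    """Count probe requests per client IP from (ip, path) pairs."""
    counts = Counter()
    for ip, path in requests:
        if is_probe(path):
            counts[ip] += 1
    return counts
```

Counting per IP makes repeat offenders easy to spot, for example to feed a firewall blocklist.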

It’s a shame, really; the internet used to be more neighbourly.